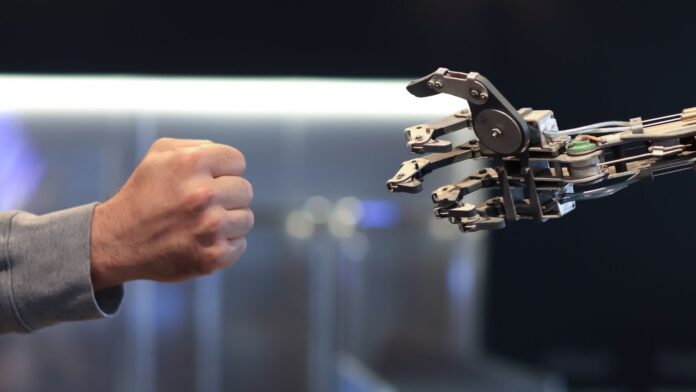

If 2025 was the year organizations flirted with artificial intelligence, 2026 will be the year communicators are judged on whether they can balance it with something machines still cannot convincingly replicate: human authenticity.

AI is now embedded in the daily work of public relations and reputation management. It drafts press releases in seconds, monitors sentiment in real time, flags potential crises before they trend and optimizes message distribution with machine precision. These capabilities are no longer futuristic. They are expected. The real question is no longer whether communicators will use AI, but how they will use it without losing their voice, values and credibility.

This tension will define the practice of PR in 2026.

Consider a familiar scenario. A company faces public criticism after a service failure. Within minutes, AI tools generate a technically flawless holding statement: an empathetic opening line, acknowledgment of concern, assurance of investigation and a promise to improve. It ticks every box. Yet once released, it triggers a second wave of backlash. People describe it as cold, generic or insincere. Why? Because it sounds like it was produced by a system, not by people who genuinely understood the frustration caused.

Contrast this with another response we increasingly see. The initial statement is slower, shorter and more human. It admits uncertainty. It avoids overpromising. It uses plain language. Behind the scenes, AI still plays a role by scanning sentiment, identifying affected stakeholders and modeling escalation risks. But the final decision on tone, timing and content is human. The difference is not technological sophistication. It is authorship.

In 2026, audiences will be far less forgiving of messages that feel optimized but empty.

The same dynamic is playing out in internal communication. Many organizations now use AI to generate town hall scripts, leadership emails and even performance messages. On paper, this increases efficiency and consistency. In practice, employees quickly notice when leaders sound polished yet emotionally distant. A CEO message written with AI assistance but delivered without personal context often lands flat. Meanwhile, a less eloquent note that references specific team struggles, shared fatigue or recent wins resonates far more deeply.

Employees, like consumers, are becoming skilled detectors of authenticity. They may not know how AI works, but they know when a message feels borrowed rather than owned.

Crisis communication provides perhaps the clearest illustration of why balance matters. AI can analyze historical crises and suggest response frameworks that minimized reputational damage in the past. That is powerful. But crises are not purely technical problems. They are moral moments. When safety, livelihoods or dignity are at stake, repeating what worked before may be reputationally disastrous.

Imagine a scenario where data shows that issuing an apology within six hours reduces negative sentiment. AI will recommend speed. A human communicator might pause and recognize that the facts are incomplete, emotions are raw and any premature statement could appear defensive or self-protecting. In 2026, restraint will be as important as responsiveness. Machines optimize for metrics. Humans must optimize for trust.

The same applies to social media. AI can tell brands what topics are trending, what formats perform best and what language drives engagement. The result is a flood of timely, clever and highly forgettable content. We have all seen brands rush to comment on sensitive issues simply because the algorithm rewards relevance. The backlash that follows is often not about the position taken, but about the perceived opportunism.

By contrast, brands that choose silence, or a carefully considered response, often earn respect precisely because they resisted the pressure to perform. In a machine-accelerated environment, choosing not to speak can be a deeply human act.

This is why the danger in 2026 is not overusing AI, but outsourcing judgment to it.

We are already seeing the consequences: corporate apologies that feel legally safe but emotionally absent; purpose statements that sound aspirational yet disconnected from actual behavior; and influencer partnerships selected by data but misaligned with brand values. These are not failures of technology. They are failures of governance.

The best communicators in 2026 will treat AI as an intelligence layer, not a moral compass.

Practically, this means clear rules of engagement. AI can draft, but humans must own the final voice. AI can recommend, but humans must decide. AI can accelerate listening, but humans must interpret meaning. Organizations that blur these boundaries risk producing communication that is efficient, consistent and ultimately untrustworthy.

Reputation itself is also changing. We are moving away from a world where perception was shaped mainly by deliberate campaigns. Today, reputation is increasingly shaped by fragments: a clipped video, a resurfaced quote, a forwarded message stripped of context. AI accelerates this exposure and surfaces inconsistencies instantly. In this environment, authenticity is no longer something you declare. It is something others infer over time.

This raises the stakes. AI-assisted communication can amplify truth, but it can also expose gaps between words and actions faster than ever before. Organizations that rely on machine-polished messaging without aligning behavior will find themselves unmasked.

The upside, however, is substantial.

Communicators who master the balance between human authenticity and machine intelligence will operate with both speed and soul. They will listen better, anticipate risks earlier and respond more strategically. More importantly, they will preserve the one asset no algorithm can manufacture: trust.

In 2026, credibility will not come from sounding smart. It will come from sounding real. Trust will not be built by volume, but by consistency. Reputation will not be protected by tools alone, but by the values guiding their use.

Those who get this balance right will not just manage reputation. They will earn it.